
On January 30, 2026, a documentary about First Lady Melania Trump achieved something statistically impossible: it became simultaneously the most beloved and most despised film in the history of Rotten Tomatoes.

The numbers appear simple. Critics gave it 7%. Verified audiences gave it 99%. But simplicity is deceptive. What you’re looking at isn’t a gap in taste; it’s a 92-percentage-point chasm, the largest divergence in the platform’s two-decade history. And from the perspective of data science, algorithmic auditing, and basic statistical probability, it represents something else entirely: evidence of the most expensive reputation management campaign ever conducted for a documentary film.

Consider what 99% actually means. Not 99% approval: that’s vague, malleable. But 99% of verified ticket buyers, people who proved they purchased admission, rating the film positively. Out of every 100 paying customers, only one found it disappointing. Not mediocre. Not “fine for what it is.” Disappointing enough to leave a negative review.

This is the approval rating of “The Godfather.” Actually, it exceeds “The Godfather,” which sits at 98% on Rotten Tomatoes. The Melania documentary, according to its verified audience score, is more universally beloved than “The Shawshank Redemption” (also 98%). More cherished than “Paddington 2,” more acclaimed than “Toy Story,” more satisfying than every Pixar masterpiece and every crowd-pleasing triumph in cinema history.

Professional critics, meanwhile, called it “vapid,” “stultifying,” and, in the assessment that likely stung most, a “criminally shallow propaganda puff piece.” They found it an expensive exercise in hagiography, “pure absence” despite a staggering $75 million in acquisition and marketing spending. The critical consensus wasn’t mixed. It was damning.

So you have a choice. Either you believe that audiences discovered a misunderstood masterpiece that nearly every professional film critic in America somehow failed to recognize. Or you recognize what the algorithms already know: a 99% verified audience score for a film critics rated at 7% doesn’t reveal consensus. It reveals coordination.

The Baseline Problem

Human preference follows predictable patterns. Even for genuinely beloved films (the kind that define generations, that people watch repeatedly, that inspire real devotion), there’s variance. Some viewers find “The Godfather” too slow. Others think “Casablanca” is overrated. A percentage of the population will dislike anything, no matter how widely praised, because human taste is diverse and critical faculties vary.

Statistical models account for this. In any organic data set of 100 people rating a film, you expect a distribution. Not uniformity. Not near-unanimity. A bell curve, or at least the ragged outline of human disagreement.

The Melania documentary showed no such distribution. From 100 verified reviews through 500, the score remained locked at 99% without fluctuation. In a forensic analysis of 220 individual verified reviews, 97% were positive, leaving only a handful of negative reviews in the entire sample.
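To put a rough number on that intuition, you can model each verified review as an independent coin flip that lands positive with some fixed probability. This is a simplified illustration, not the method behind the forensic analysis: the function names are hypothetical, the true positive rate `p_positive` is an assumed parameter rather than a measured one, and real reviews are not truly independent draws. The sketch computes the chance that at least 99% of `n` reviews come back positive, summing the binomial tail in log space so large `n` doesn’t overflow.

```python
import math


def binomial_upper_tail(n: int, k: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), with each term computed in log space."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    log_p, log_q = math.log(p), math.log1p(-p)
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    for i in range(max(k, 0), n + 1):
        # log of C(n, i) * p**i * (1 - p)**(n - i)
        log_pmf = (log_n_fact - math.lgamma(i + 1) - math.lgamma(n - i + 1)
                   + i * log_p + (n - i) * log_q)
        total += math.exp(log_pmf)
    return min(total, 1.0)


def prob_at_least_share(n: int, share: float, p_positive: float) -> float:
    """Chance that at least `share` of n independent reviews are positive."""
    # The small epsilon guards against floating-point error in share * n.
    k = math.ceil(share * n - 1e-9)
    return binomial_upper_tail(n, k, p_positive)


if __name__ == "__main__":
    # Hypothetical true positive rates; none of these figures come from the article.
    for p in (0.80, 0.90, 0.95, 0.99):
        print(f"p = {p:.2f}: P(at least 99% of 500 positive) = "
              f"{prob_at_least_share(500, 0.99, p):.2e}")
```

Even under a generous assumed rate of 90% positive, 500 independent reviews reach 99% or higher with a probability far below one in a trillion; only when the assumed rate is itself near 99% does the observed score become unremarkable.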

This is what forensic analysts call a “binary spike”: a data visualization that should show variation but instead shows a near-vertical line. It’s the signature of a gamed system. And when you examine the temporal pattern, the coordination becomes more obvious: 63% of verified reviews appeared within a single 48-hour window.

In organic growth, reviews trickle in over days and weeks as word of mouth spreads, as different audiences discover the film, as people process their reactions. Temporal clustering (massive review volume concentrated in a brief period) is characteristic of “drip campaigns,” coordinated efforts in which participants are scheduled to post feedback to manipulate public perception.
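The 48-hour clustering figure is the kind of thing a sliding window over review timestamps can check. This is a sketch under assumptions: the article doesn’t say how the 63% figure was computed, `densest_window_share` is a hypothetical helper, and the timestamps in the example are synthetic. It sorts the timestamps and uses two pointers to find the largest share of reviews that fit inside any single window of the chosen length.

```python
from datetime import datetime, timedelta


def densest_window_share(timestamps: list[datetime],
                         window: timedelta = timedelta(hours=48)) -> float:
    """Largest fraction of reviews falling inside any one window of length `window`."""
    if not timestamps:
        return 0.0
    times = sorted(timestamps)
    best = 0
    start = 0
    # Two-pointer sweep: treat each review as the window's right edge and
    # advance the left edge until the span fits inside `window`.
    for end, t in enumerate(times):
        while t - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best / len(times)


if __name__ == "__main__":
    launch = datetime(2026, 1, 30)
    # Synthetic burst: 63 reviews, one every 45 minutes (about 46.5 hours total).
    burst = [launch + timedelta(minutes=45 * i) for i in range(63)]
    # Synthetic trickle: 37 reviews starting at hour 50, one every 19 hours.
    trickle = [launch + timedelta(hours=50 + 19 * i) for i in range(37)]
    # Organic-looking baseline: 100 reviews, one per day.
    steady = [launch + timedelta(days=i) for i in range(100)]
    print(f"burst + trickle: {densest_window_share(burst + trickle):.0%}")
    print(f"steady: {densest_window_share(steady):.0%}")
```

One review a day puts at most 3% of 100 reviews in any 48-hour window, while the synthetic burst reproduces the 63% pattern described above.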

The Tell: When Verification Backfires

Rotten Tomatoes introduced verified ratings to solve a problem: review bombing. The platform wanted to prevent people who hadn’t seen a film from flooding it with negative reviews, particularly for controversial or politically charged releases. The solution seemed elegant. Require proof of ticket purchase through Fandango. Make people put money where their mouth is.

The unintended consequence: they created a system where reputation management firms and studios with sufficient resources could purchase credibility.

The math is straightforward. Amazon MGM reportedly spent $35 million marketing “Melania.” If you wanted to manufacture 500 verified reviews, you’d need 500 tickets. At an average price of $20 per ticket, that’s $10,000, roughly 0.03% of the marketing budget. Less than a rounding error.
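The arithmetic is easy to reproduce. This tiny sketch uses only the article’s own inputs (500 reviews, an assumed $20 average ticket, and the reported $35 million marketing spend); `coordination_cost` is a hypothetical helper name.

```python
def coordination_cost(reviews: int, ticket_price: float,
                      marketing_budget: float) -> tuple[float, float]:
    """Return (total ticket cost, that cost as a percentage of the marketing budget)."""
    cost = reviews * ticket_price
    return cost, 100 * cost / marketing_budget


if __name__ == "__main__":
    cost, pct = coordination_cost(500, 20.0, 35_000_000)
    print(f"${cost:,.0f} = {pct:.2f}% of the marketing budget")
```

It prints `$10,000 = 0.03% of the marketing budget`; the unrounded share is about 0.029%.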

Those tickets get distributed to accounts. Those accounts post reviews. The reviews get verified because the tickets are real. The “Verified Hot” badge appears on Rotten Tomatoes, signaling to casual browsers that this isn’t astroturfing: these are real people who really paid to see the film and really loved it.

Except investigation reveals patterns inconsistent with organic enthusiasm. Nearly all verified positive review accounts had no prior history on the platform. They were created specifically for this film. Profile pictures were absent. Usernames followed generic patterns: “First Name/Last Initial.” The review language itself showed suspicious repetition-phrases like “wonderful lady,” “elegant and sincere,” and “human warmth” appeared across dozens of reviews without specific details about the film’s structure, cinematography, or narrative approach.
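The account-level patterns above translate naturally into simple heuristics. The sketch is illustrative only: the `Review` record, the regex for the “First Name/Last Initial” username pattern, and the flag names are all assumptions, since Rotten Tomatoes does not expose reviewer data in this form; the stock phrases are the ones quoted in this article. No single flag proves coordination; what an auditor looks for is several flags co-occurring across many accounts.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Review:
    """Hypothetical review record; not a real Rotten Tomatoes data format."""
    username: str
    prior_reviews: int
    has_avatar: bool
    text: str


# Assumed shape of a "First Name/Last Initial" username, e.g. "Linda M" or "Linda M."
GENERIC_NAME = re.compile(r"^[A-Z][a-z]+ [A-Z]\.?$")

# Stock phrases quoted in the article.
STOCK_PHRASES = ("wonderful lady", "elegant and sincere", "human warmth")


def red_flags(review: Review) -> list[str]:
    """List which of the article's account-level warning signs a review shows."""
    flags = []
    if review.prior_reviews == 0:
        flags.append("no prior history")
    if not review.has_avatar:
        flags.append("no profile picture")
    if GENERIC_NAME.match(review.username):
        flags.append("generic username")
    lowered = review.text.lower()
    if any(phrase in lowered for phrase in STOCK_PHRASES):
        flags.append("stock phrase")
    return flags


def phrase_counts(reviews: list[Review]) -> Counter:
    """Count how many reviews contain each stock phrase."""
    counts = Counter()
    for r in reviews:
        lowered = r.text.lower()
        for phrase in STOCK_PHRASES:
            if phrase in lowered:
                counts[phrase] += 1
    return counts


if __name__ == "__main__":
    sample = [
        Review("Linda M", 0, False, "A wonderful lady. Elegant and sincere."),
        Review("cinephile_88", 214, True, "Flat pacing, but the archival shots are striking."),
    ]
    for r in sample:
        print(r.username, red_flags(r))
    print(phrase_counts(sample))
```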

The style of many reviews matched the output patterns of large language models rather than natural human writing. Brief, positive, vague. The kind of content you’d generate if you were running 500 micro-tasks through Amazon Mechanical Turk, a platform Amazon conveniently owns, and asking workers to write something nice about the First Lady’s documentary after verifying a ticket purchase.

The 1% Strategy

Here’s where the coordination becomes most visible: that single negative review out of 100. Professional reputation managers understand that 100% approval triggers immediate suspicion. It’s too perfect, too obvious, too clean. A 100% score screams “manipulation” to anyone paying attention.

But 99%? That’s the sweet spot. It suggests overwhelming enthusiasm while maintaining plausible deniability. Nearly everyone loved it, the score implies, but there were a couple of outliers-enough to make it seem real without damaging the narrative.

This is reputation management 101: introduce just enough noise to appear legitimate while maintaining the desired message. One carefully placed negative review per 100 positive ones creates the illusion of authenticity while preserving the “masterpiece” rating Amazon needs to market the film on Prime Video.
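How much noise can a campaign add before the score slips? That depends on how the displayed percentage is rounded. The sketch below assumes the score is the positive share rounded half up to a whole number; that rounding rule is an assumption, not Rotten Tomatoes’ documented behavior, and `negatives_showing_score` is a hypothetical helper. It lists every count of negative reviews that still displays as 99%.

```python
def negatives_showing_score(n: int, target: int = 99) -> list[int]:
    """Negative-review counts (out of n) whose displayed score equals `target`.

    Assumption: displayed score = 100 * positives / n, rounded half up to an
    integer. The platform's real rounding rule may differ.
    """
    hits = []
    for negatives in range(n + 1):
        positives = n - negatives
        # Integer form of floor(100 * positives / n + 0.5); avoids float rounding.
        displayed = (200 * positives + n) // (2 * n)
        if displayed == target:
            hits.append(negatives)
    return hits


if __name__ == "__main__":
    for n in (100, 500):
        hits = negatives_showing_score(n)
        print(f"{n} reviews: {hits[0]} to {hits[-1]} negatives still show 99%")
```

Under that assumption, 100 reviews allow exactly one negative, while 500 reviews allow anywhere from three to seven, so a score can sit at 99% from 100 reviews through 500 while the number of negatives varies.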

The IMDb Contradiction

If the 99% score represented genuine audience enthusiasm, you’d expect consistency across platforms. You don’t get it.

On IMDb, where verification isn’t required but which has its own algorithmic protections against manipulation, “Melania” hit a record low of 1.0 out of 10 during its opening weekend. It eventually stabilized at 1.3 after more than 22,000 votes. IMDb’s system detected “unusual voting activity” on the title, a flag that appears when algorithms identify non-natural score distributions.

The chasm between platforms is revealing. Rotten Tomatoes: 99% positive from verified ticket buyers. IMDb: 1.3 out of 10 from the broader public. The unverified “All Audience” score on Rotten Tomatoes itself dropped to 27%, a 72-percentage-point plunge from the verified score.

What you’re seeing isn’t a film with universal appeal that critics somehow missed. You’re seeing the effect of curation. When you require ticket purchases to leave reviews, you’re not measuring “audience response.” You’re measuring the response of people who chose to pay money to see a documentary about Melania Trump, directed by Brett Ratner, executive-produced by the subject herself, marketed as a behind-the-scenes look at the Trump family’s return to power.

The Self-Selection Filter

Data from EntTelligence reveals who actually bought tickets. Nearly 53% of sales came from Republican-leaning counties. Theaters in rural areas contributed almost half the opening-weekend total. The audience skewed dramatically older (more than 70% were 55 or above), and 75% were white.

This isn’t representative of “audiences” in the aggregate. It’s a highly specific, ideologically aligned demographic. For this group, reviewing the film isn’t primarily an act of cinematic criticism. It’s political signaling, an expression of tribal affiliation.

But even granting extreme demographic self-selection, you’d expect some variance. Even among devoted Trump supporters, you’d find some who thought the film was too long, or preferred a different directorial approach, or wished for more substantive content. Human beings disagree, even within ideologically homogeneous groups.

The 99% consensus suggests something beyond self-selection. It suggests organization.

The $75 Million Question

Amazon MGM paid $40 million to acquire the documentary rights and a follow-up series. They spent another $35 million on marketing, a promotional budget equivalent to a mid-tier theatrical feature. Melania Trump herself received approximately $28 million from this deal, an unprecedented payment for a documentary subject while her husband holds office.

The film opened to between $7 million and $8.1 million in its first weekend. For a documentary, this was strong. For a $75 million investment, it was catastrophic. To break even on such spending, a film typically needs to gross $100 million-plus globally. “Melania” will never approach that number theatrically.

But Amazon isn’t playing theatrical math. Kevin Wilson, head of domestic theatrical distribution at Amazon MGM, called the opening “very encouraging” and described it as the “first step in what we see as a long-tail lifecycle” on Prime Video. The theatrical release was never meant to recoup investment. It was a credibility play, a way to generate the “beloved by audiences” narrative that drives streaming subscriptions.

The 99% score is the centerpiece of that strategy. It allows Amazon to advertise the film as “#1 documentary of the last decade” and “audience favorite” despite universal critical condemnation. In Amazon’s internal calculations, that manufactured consensus is worth more than box office receipts.

The Algorithmic Audit

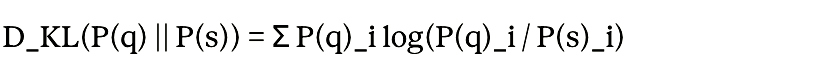

To measure how far a 99% positive consensus departs from what a film with 7% critical approval would organically produce, data scientists can use Kullback-Leibler divergence, a measure of how much one probability distribution differs from another. If the “true” reception of the film is represented by a distribution $P_q$, derived from critical reception and cross-platform audience data, and the observed Rotten Tomatoes verified score by a distribution $P_s$, the divergence is calculated as:

$$D_{\mathrm{KL}}(P_q \,\|\, P_s) = \sum_{x} P_q(x) \log \frac{P_q(x)}{P_s(x)}$$

where $x$ ranges over the possible outcomes of a review (positive or negative).

For “Melania,” the divergence is extreme-so high that it indicates the verified audience input isn’t responding to the film’s quality but represents an independent variable controlled by external factors. In simpler terms: the reviews aren’t measuring the movie. They’re measuring the effectiveness of the coordination campaign.
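When a review can only be positive or negative, the Kullback-Leibler divergence between a reference positive rate q and an observed rate s has just two terms: q·log(q/s) + (1 − q)·log((1 − q)/(1 − s)). The sketch computes it in nats. Treating reviews as a single Bernoulli rate is a simplification, and using the 7% critics’ score or the 27% all-audience score as the reference is an illustrative assumption, not the analysis’s actual input. The divergence measures how far apart the two distributions are; it is not itself a probability.

```python
import math


def bernoulli_kl(q: float, s: float) -> float:
    """KL divergence, in nats, of Bernoulli(s) from reference Bernoulli(q)."""
    if not 0.0 < s < 1.0:
        raise ValueError("s must be strictly between 0 and 1")
    total = 0.0
    for a, b in ((q, s), (1.0 - q, 1.0 - s)):
        if a > 0.0:  # by convention, 0 * log(0 / b) contributes 0
            total += a * math.log(a / b)
    return total


if __name__ == "__main__":
    observed = 0.99  # verified audience score
    # Illustrative reference rates taken from the article's figures.
    for label, ref in (("critics", 0.07), ("all-audience", 0.27)):
        print(f"{label}: {bernoulli_kl(ref, observed):.2f} nats")
```

With the critics’ 7% as reference, the divergence from a 99% observed score comes out to roughly 4.0 nats; with the 27% all-audience score, roughly 2.8. For comparison, a film whose reference rate were 95% would sit at about 0.04 nats.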

This is what Professor Nik Bear Brown, who specializes in data validation and machine learning at Northeastern University, identifies as “zero confidence in the system’s integrity.” When a polarized film achieves 99% consensus, Brown notes, you’re not seeing 99% approval. You’re seeing 0% probability that the feedback is legitimate.

The Ratner Gambit

Brett Ratner hadn’t directed a major release since 2017, when allegations of sexual misconduct made him persona non grata in Hollywood. “Melania” was his comeback vehicle, granted through connections to the Trump family and facilitated by Amazon’s willingness to pay extraordinary sums for access.

Critics noticed. Their reviews focused not just on the film’s ideology but on its technical incompetence. “Ratner couldn’t find the humanity in a funeral,” one wrote. Despite unprecedented access (20 days of behind-the-scenes footage during a presidential transition), the film revealed almost nothing. It offered curated, sanitized moments: the First Lady selecting flowers, organizing seating charts, navigating White House logistics. Critics described it as watching “pure absence,” a $75 million void where documentary insight should exist.

The audience score of 99% praised the film’s “human warmth” and “intimate portrait.” Either critics and audiences watched completely different films, or one group’s assessment was manufactured to counter the other’s.

The Favor Currency

Jeff Bezos, who controls Amazon, has extensive business interests affected by federal policy. Antitrust investigations. Government cloud computing contracts worth billions. Regulatory frameworks for e-commerce and streaming. The Washington Post, which Bezos owns, has faced sustained attacks from Trump and his allies.

Industry analysts have suggested that the $75 million “Melania” investment, particularly the $28 million payment to the First Lady, functions as reputation insurance, a high-priced gift of favorable coverage during Trump’s second term. The 99% audience score becomes part of that gift, a data-backed narrative that the First Lady enjoys immense popular appeal.

This is reputation management as corporate strategy, and it only works because Rotten Tomatoes’ verification system can be exploited by anyone with sufficient resources. Amazon owns the studio producing the film. Amazon owns Mechanical Turk, the infrastructure ideal for coordinating exactly this kind of micro-task campaign. Amazon has the promotional budget to purchase however many verified tickets the operation requires.

The system was designed to prevent negative review bombing. It created a mechanism for positive review boosting. And when your studio, coordination infrastructure, and distribution platform are all owned by the same parent company, the cost of manufacturing consensus drops to nearly zero.

The Aggregator Crisis

For two decades, aggregation sites like Rotten Tomatoes have functioned as shorthand for quality. The Tomatometer became cultural currency, a quick-reference guide in an overwhelmed media landscape. Fresh or Rotten. Simple, legible, seemingly objective.

“Melania” breaks that system. A 7% critic score tells you what professional reviewers think. A 99% verified audience score was supposed to tell you what paying customers think. Instead, it tells you what $10,000 in strategic ticket purchases and coordinated review campaigns can accomplish.

The “Verified Hot” badge, once a signal of authenticity, has become a vector for manipulation. When verification requires only proof of purchase, and purchase is trivially cheap relative to marketing budgets, the verification becomes meaningless. Anyone with resources can verify themselves into a masterpiece rating.

Algorithmic detection catches some of this. IMDb flagged unusual voting activity. The dramatic score discrepancy between verified and unverified reviews on Rotten Tomatoes itself signals manipulation. But by the time detection occurs, the damage is done. The 99% score has already been promoted, quoted, embedded in marketing materials. It lives forever in search results and press releases, a manufactured data point that takes on the authority of objective measurement.

What the Silence Reveals

Amazon MGM has not disputed the 99% score or addressed questions about its legitimacy. Why would they? The score serves its purpose regardless of credibility. Casual browsers see “99% Verified Hot” and assume audiences loved it. Marketing teams deploy “audience favorite” in promotional materials. Prime Video features it prominently with its implausibly high rating intact.

The studio’s silence is strategic. Engaging with accusations of manipulation would only draw attention to the vulnerability. Better to let the 99% stand, let the “Verified” badge do its work, let algorithms and percentages perform their magic of transforming coordination into consensus.

But the silence also reveals something else: confidence that nothing will change. That platforms won’t strengthen verification beyond ticket purchase. That audiences won’t develop literacy in reading score manipulation. That the gap between critics and “audiences” can be manufactured indefinitely as long as you control the infrastructure, the distribution, and the ticket sales.

The 92-percentage-point divergence for “Melania” isn’t an anomaly. It’s a proof of concept. It demonstrates that with sufficient resources, any studio can purchase a masterpiece rating for any film, no matter how universally panned by critics, no matter how genuinely unpopular with broader audiences.

The only question is whether they’ll be as obvious about it next time.

When your documentary about the First Lady receives worse reviews than “Cats,” and your solution is to manufacture a 99% audience score with a sample size small enough to coordinate through group chat, you’re not demonstrating confidence in your product.

You’re demonstrating that you know exactly how much a data point costs, and exactly how little credibility matters when you own the platform where credibility is measured.

The score is 99%. The confidence is zero. And the difference between those numbers is what $75 million buys you in the age of algorithmic reputation management.